A study that changes how we think about search

Researchers at the University of Toronto ran thousands of queries through ChatGPT, Claude, Perplexity, Gemini, and Google. Same questions, wildly different answers: not in what the answers said, but in where the engines pulled their information from. In September 2025, they published their findings in a paper titled “Generative Engine Optimization: How to Dominate AI Search”. In this post, I'll walk you through what the Toronto team actually did.

The experiment design that reveals everything

In August 2025, the research team ran over 1,000 base queries across ten verticals including consumer electronics, automotive, software, and local services. Each query went through all major AI engines plus Google for comparison.

They classified every single source into three categories. Brand sources from company-owned websites. Earned sources from third-party media and review sites. Social sources from Reddit, YouTube, and forums. Simple framework, profound implications.

But here's where it gets interesting. They didn't just run straight queries. They tested paraphrase sensitivity with seven variations of each query to see if slight wording changes affected results. They examined three query types: informational (learning), consideration (comparing), and transactional (buying). They even translated queries into Chinese, Japanese, German, French, and Spanish to understand language effects.

All told, they analyzed over 5,000 query variations and classified thousands of domains. This isn't a handful of anecdotal observations. This is systematic analysis at scale.

The scale of divergence is surprising. When you search Google and when you query AI engines, you're accessing fundamentally different webs. According to the study, the sources they reference overlap by only 10 to 30 percent. Think about that. At least seven times out of ten, a domain cited by AI search doesn't even appear in Google's top results.
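To make "overlap" concrete, here is a minimal Python sketch of one way to measure it. It assumes overlap means the Jaccard index (shared domains divided by all distinct domains) over the domains each system returns; the study may define it differently. The function names and sample URLs are made up for illustration and are not taken from the paper.

```python
from urllib.parse import urlparse


def domain(url: str) -> str:
    """Return the lowercase host of a URL, without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def jaccard_overlap(urls_a, urls_b) -> float:
    """Shared domains divided by all distinct domains across both lists."""
    a = {domain(u) for u in urls_a}
    b = {domain(u) for u in urls_b}
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


# Illustrative data only (hypothetical results for one query).
google_results = [
    "https://www.rtings.com/tv",
    "https://www.samsung.com/tvs",
    "https://www.reddit.com/r/hometheater",
    "https://www.cnet.com/tv",
]
ai_citations = [
    "https://www.rtings.com/tv/reviews",
    "https://www.techradar.com/tvs",
    "https://www.tomsguide.com/tvs",
]

overlap = jaccard_overlap(google_results, ai_citations)
print(f"{overlap:.0%}")  # one shared domain out of six distinct ones
```

Run the same comparison for your own top queries and you get a rough sense of how much of your Google visibility carries over to a given AI engine.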

With Perplexity handling 780 million queries monthly and 34 percent of US adults using ChatGPT, we're watching the rules of visibility get rewritten in real time.

The earned media dominance that reshapes everything

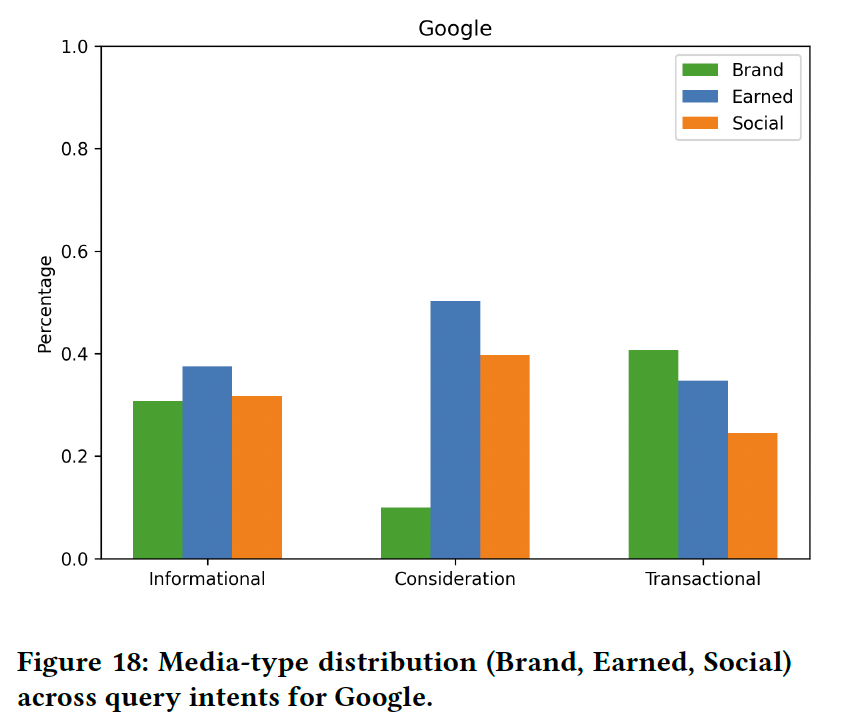

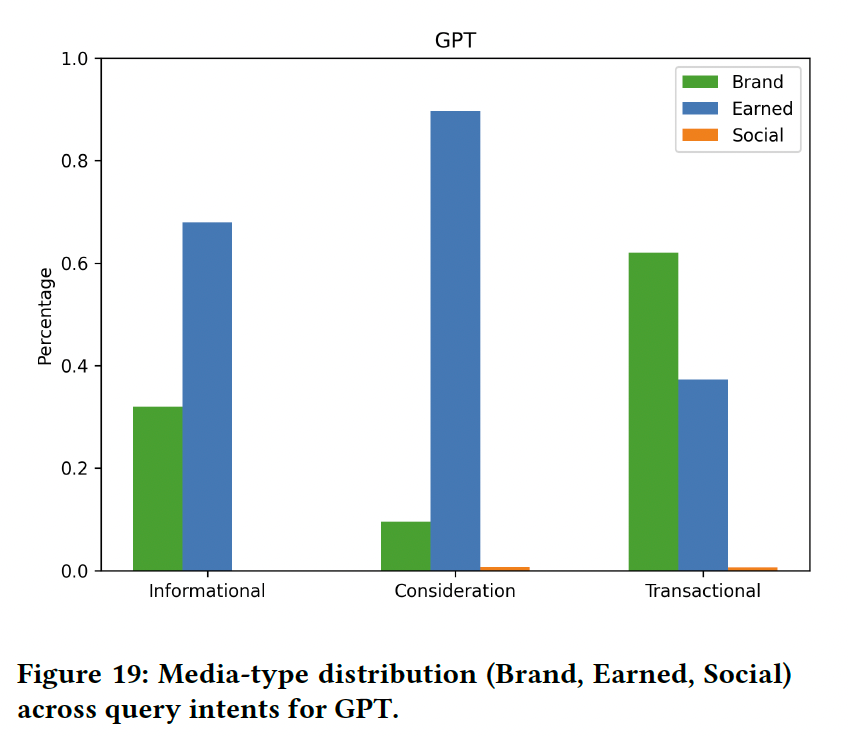

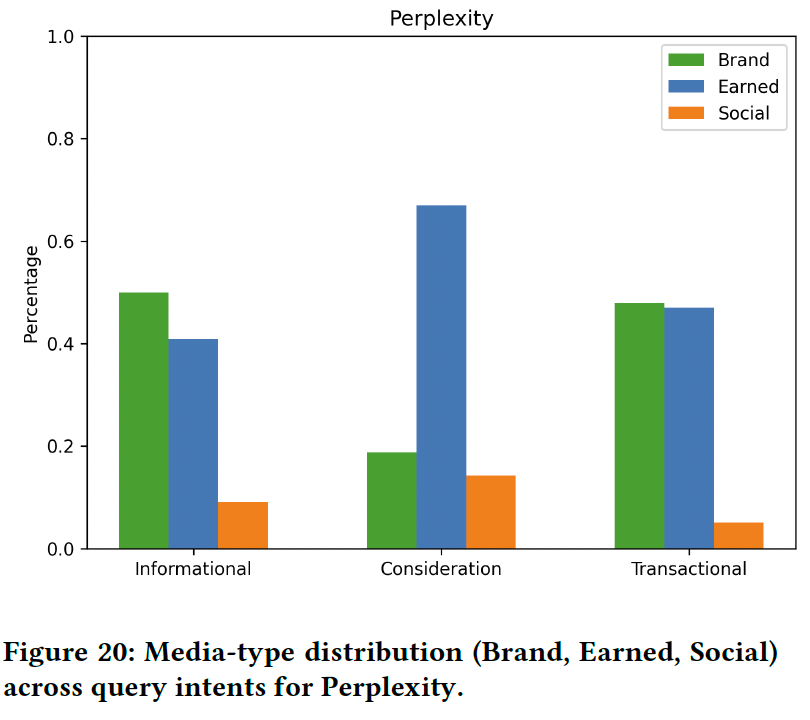

The Toronto team classified every source into three buckets. Brand (company websites), Earned (third-party reviews and media), and Social (Reddit, forums, YouTube). Then they analyzed how these sources get cited across three query types.

Informational queries (learning intent, like "How do OLED TVs work?") show the starkest differences. In general, Google balances all three source types. ChatGPT pulls significantly from earned media. Perplexity appears to favor brand domains for educational content.

Consideration queries (comparing options, like "Best laptops for students 2025") see earned media dominate everywhere. But Google still includes Reddit discussions while ChatGPT almost eliminates social content entirely (surprise!).

Transactional queries (ready to buy, like "Buy iPhone 15 online") trigger a shift. Brand content suddenly matters more, especially for ChatGPT which dramatically amplifies brand sources it previously ignored.

The takeaway is that Google maintains balance across intents. AI engines swing wildly based on what users are trying to do. Your brand content might be less visible for educational queries but critical for purchase decisions. Plan accordingly.
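If you want to run the same kind of breakdown on your own citation logs, the sketch below shows one way to do it in Python. The domain lists are hypothetical stand-ins, and treating every unlisted domain as "earned" is a simplifying assumption, not the study's actual coding scheme.

```python
from collections import Counter, defaultdict

# Hypothetical lookup tables for illustration only.
SOCIAL_DOMAINS = {"reddit.com", "youtube.com", "quora.com"}
BRAND_DOMAINS = {"apple.com", "samsung.com", "dell.com"}


def classify(domain: str) -> str:
    """Assign a domain to one of the three buckets."""
    if domain in SOCIAL_DOMAINS:
        return "social"
    if domain in BRAND_DOMAINS:
        return "brand"
    return "earned"  # assumption: anything else is third-party media


def source_mix(citations):
    """citations: iterable of (intent, domain) pairs -> {intent: {bucket: share}}."""
    counts = defaultdict(Counter)
    for intent, dom in citations:
        counts[intent][classify(dom)] += 1
    mix = {}
    for intent, counter in counts.items():
        total = sum(counter.values())
        mix[intent] = {bucket: n / total for bucket, n in counter.items()}
    return mix


# Made-up citations, not data from the study.
citations = [
    ("informational", "rtings.com"),
    ("informational", "samsung.com"),
    ("consideration", "techradar.com"),
    ("consideration", "reddit.com"),
    ("consideration", "cnet.com"),
    ("consideration", "wired.com"),
    ("transactional", "apple.com"),
    ("transactional", "apple.com"),
]

mix = source_mix(citations)
for intent, shares in mix.items():
    print(intent, shares)
```

Comparing the mix per intent, per engine, is what tells you whether your brand pages or your earned coverage is doing the work for a given type of query.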

The big brand monopoly in generic searches

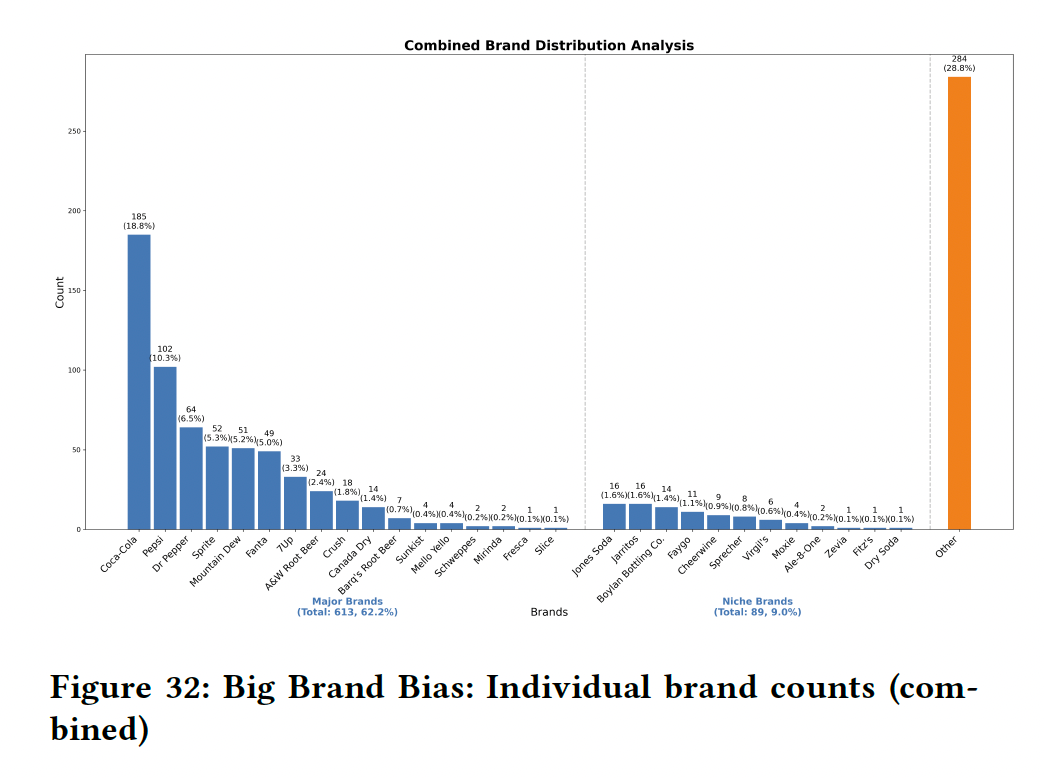

When users ask generic questions without mentioning specific brands, AI engines reveal a striking pattern. The Toronto team tested fifty cola-related queries like "best soda brands" without naming any companies.

Coca-Cola and Pepsi dominated the responses. Together, they captured the majority of all brand mentions, appearing far more than all craft and regional sodas combined. Twenty niche brands the researchers tracked? They barely registered.

Perplexity showed an even stronger bias toward major brands than ChatGPT. While ChatGPT at least occasionally mentioned craft sodas like Jones Soda or Boylan, Perplexity almost exclusively recommended the giants.

What's fascinating is how differently these engines gather their information yet reach similar conclusions. ChatGPT relies heavily on Wikipedia for its knowledge. Perplexity pulls from YouTube, TikTok, and various food sites. Different sources, same outcome: major brands get most of the attention.

For early-stage startups, this finding is both a challenge and an opportunity. Yes, you can't win generic searches through brand optimization alone. But here's the crucial difference: we're not competing with century-old conglomerates like Coke and Pepsi.

In AI and tech, the "giants" are often just five years ahead of you. Perplexity launched in 2022. Claude was released in 2023. The entire AI application layer is still being written. When someone asks for "best AI coding assistant," there's no hundred-year incumbent. The earned media that positions you as credible could potentially be built in quarters, not decades. The key is moving fast while the category definitions are still fluid.

How different engines see different webs

Here's what really caught my attention. Even among AI engines, there's shocking disagreement about which sources to trust.

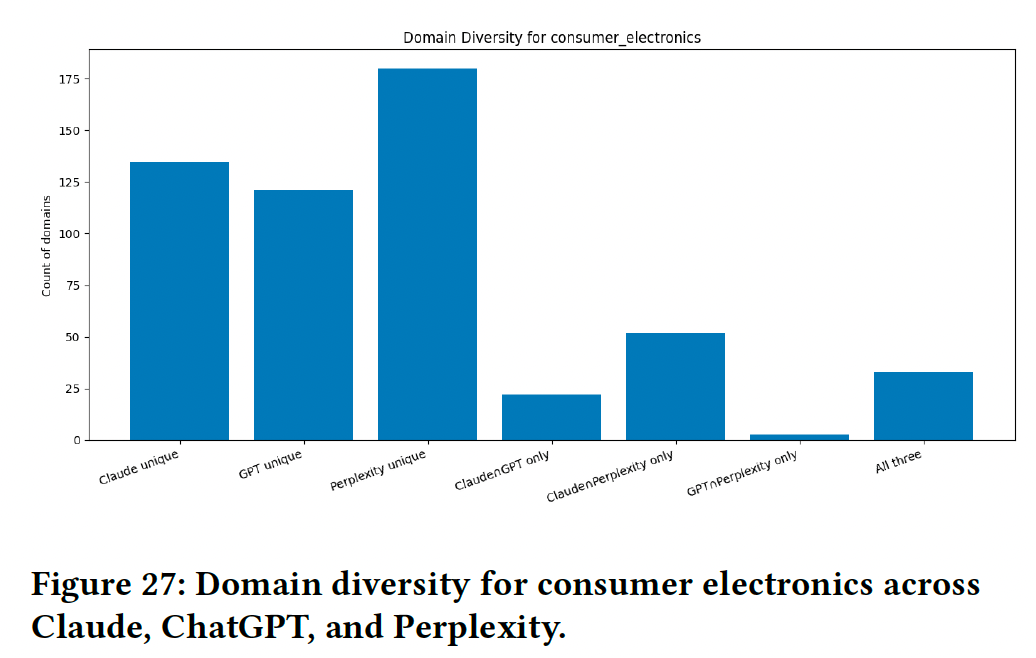

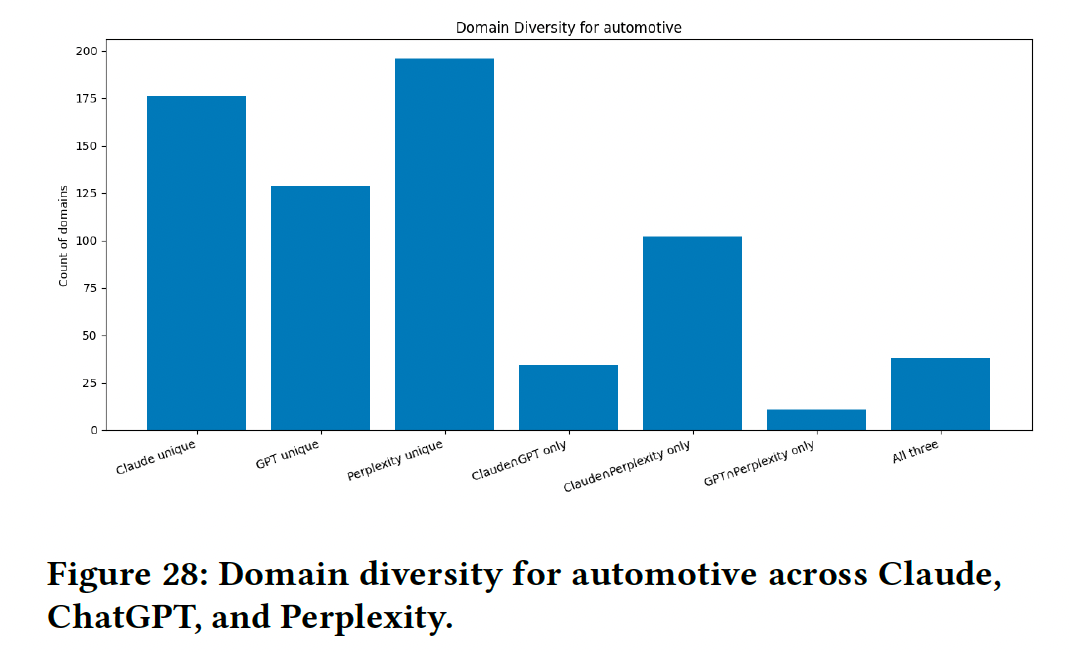

The Toronto team ran identical queries through Claude, ChatGPT, and Perplexity for automotive and consumer electronics. Same questions, completely different source ecosystems.

Claude pulled from hundreds of distinct domains.

ChatGPT used a more selective set.

Perplexity cast the widest net.

What’s surprising is how little overlap there is between what ChatGPT and Perplexity cite. Almost none! Fewer than one in ten sources appear in both. Even Claude and Perplexity, which seem to pull from similar-sized pools, only share about a quarter of their sources.

Each AI engine has essentially built its own version of the web. More than half the domains each engine cites are exclusive to that engine alone. The only consistent overlap? A tiny core of mega-publishers that everyone trusts.

Think about what this means for your optimization strategy. Yes, the mega-publishers appear everywhere. But they're the exception, not the rule. More than half of what each engine cites is completely unique to that engine.

This is where the opportunity lives. While big brands dominate the universal overlap, the vast majority of citations come from each engine's unique source pool. ChatGPT might cite a specific industry blog that Perplexity never touches. Claude might trust a technical forum that ChatGPT ignores. These unique sources aren't fighting to be the next TechRadar. They just need to be valuable to one specific engine's algorithm.

The real insight here is that you don't need to achieve universal AI visibility to win. You need to identify which engines your buyers actually use, then understand the unique sources those specific engines trust. A focused strategy targeting ChatGPT's unique source preferences might deliver better ROI than trying to appear everywhere.

This fragmentation is actually democratizing. It’s very hard for a single competitor to lock down all engines simultaneously. The "big brand advantage" only applies to that tiny universal core. In the vast unique territories each engine maintains, smaller players can establish strong positions by understanding each engine's specific preferences.
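To see how much of each engine's territory is actually "unique" for your own category, you could compute the exclusive share per engine. The Python sketch below assumes you have already collected the set of cited domains per engine; the function names and sample domains are illustrative, not figures from the study.

```python
def exclusive_share(cited: dict[str, set[str]]) -> dict[str, float]:
    """For each engine, the fraction of its cited domains no other engine cites."""
    shares = {}
    for engine, domains in cited.items():
        others = set().union(*(d for e, d in cited.items() if e != engine))
        shares[engine] = len(domains - others) / len(domains) if domains else 0.0
    return shares


def universal_core(cited: dict[str, set[str]]) -> set[str]:
    """Domains cited by every engine."""
    return set.intersection(*cited.values()) if cited else set()


# Made-up example, not data from the study.
cited = {
    "chatgpt": {"techradar.com", "wikipedia.org", "caranddriver.com", "pcmag.com"},
    "claude": {"techradar.com", "edmunds.com", "anandtech.com", "kbb.com"},
    "perplexity": {"techradar.com", "youtube.com", "edmunds.com",
                   "motortrend.com", "tomshardware.com"},
}

shares = exclusive_share(cited)
core = universal_core(cited)
print(shares)  # chatgpt 0.75, claude 0.5, perplexity 0.6
print(core)    # only techradar.com is cited by all three
```

Domains in an engine's exclusive set that are realistic targets for coverage are the ones worth researching first.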

The freshness problem hiding in plain sight

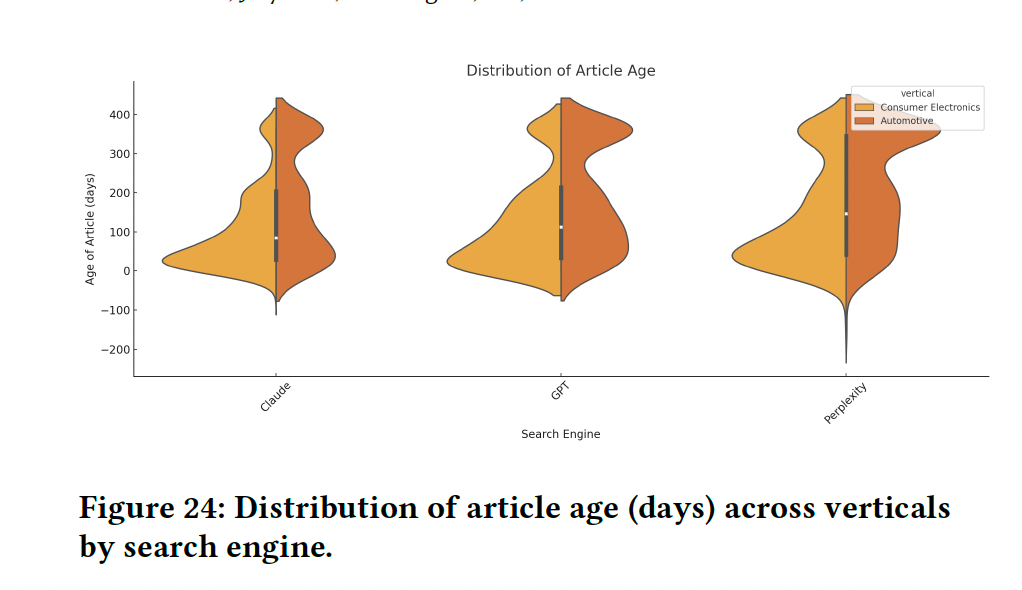

The Toronto team uncovered something that contradicts what everyone assumes about AI search. We all thought AI engines would prioritize the freshest, most current content. Like yesterday, last week, last month.

Wrong.

In consumer electronics, the average article cited is about 4 months old. In automotive, it's nearly a year. Even in fast-moving tech categories, ChatGPT and Claude cite content that's typically 60 to 120 days old. Perplexity pulls slightly fresher content, but we're still talking months, not days.

This completely reframes what "fresh" means for AI search. It's not about publishing yesterday's news or racing to comment on this morning's announcement. AI engines appear to want content that's had time to prove its value. Time to accumulate backlinks. Time to demonstrate authority through engagement and references.

Think about what this means for your content strategy timeline. If Claude is citing content that's on average 112 days old, that's roughly how long your optimization efforts will take to show results. You're not getting cited next week for content you publish today. You're getting cited in Q2 for content you publish in Q1.

This is actually good news for resource-constrained startups. You're not in a daily publishing race against TechCrunch. You're in a quarterly authority-building game. The content you create today needs to be valuable enough that it still matters in three months. The earned media coverage you get this month will peak in impact sometime next quarter.

The automotive data shows another pattern. There are evergreen pieces that could be one to two years old but still get cited because they've become canonical references in their space. The lesson? Some content should be built for permanence, not recency.

Here's the strategic reality: Plan your content calendar three months ahead. What you publish today affects your AI visibility in Q2. The earned media push you're planning for next month won't show full impact until spring. This isn't a bug in AI search. It's a feature that rewards sustained authority over viral moments.
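If you log the publication dates of the articles an engine cites for your queries, checking freshness yourself takes only a few lines. This is a rough Python sketch with invented dates; `citation_ages` is a hypothetical helper, and the paper's own method for dating articles may differ.

```python
from datetime import date
from statistics import mean, median


def citation_ages(published_dates, as_of):
    """Age in days of each cited article on the day the query was run."""
    return [(as_of - d).days for d in published_dates]


# Invented publication dates for four cited articles.
published = [
    date(2025, 5, 3),
    date(2025, 6, 2),
    date(2025, 1, 13),
    date(2024, 8, 31),  # an evergreen piece, one year old
]

ages = citation_ages(published, as_of=date(2025, 8, 31))
print(ages)          # [120, 90, 230, 365]
print(mean(ages))    # 201.25
print(median(ages))  # 175.0
```

Notice how a single evergreen reference pulls the mean well above the median. Looking at both numbers helps you separate "typical" citation age from the long tail of canonical pieces.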

Why small wording changes matter more than you think

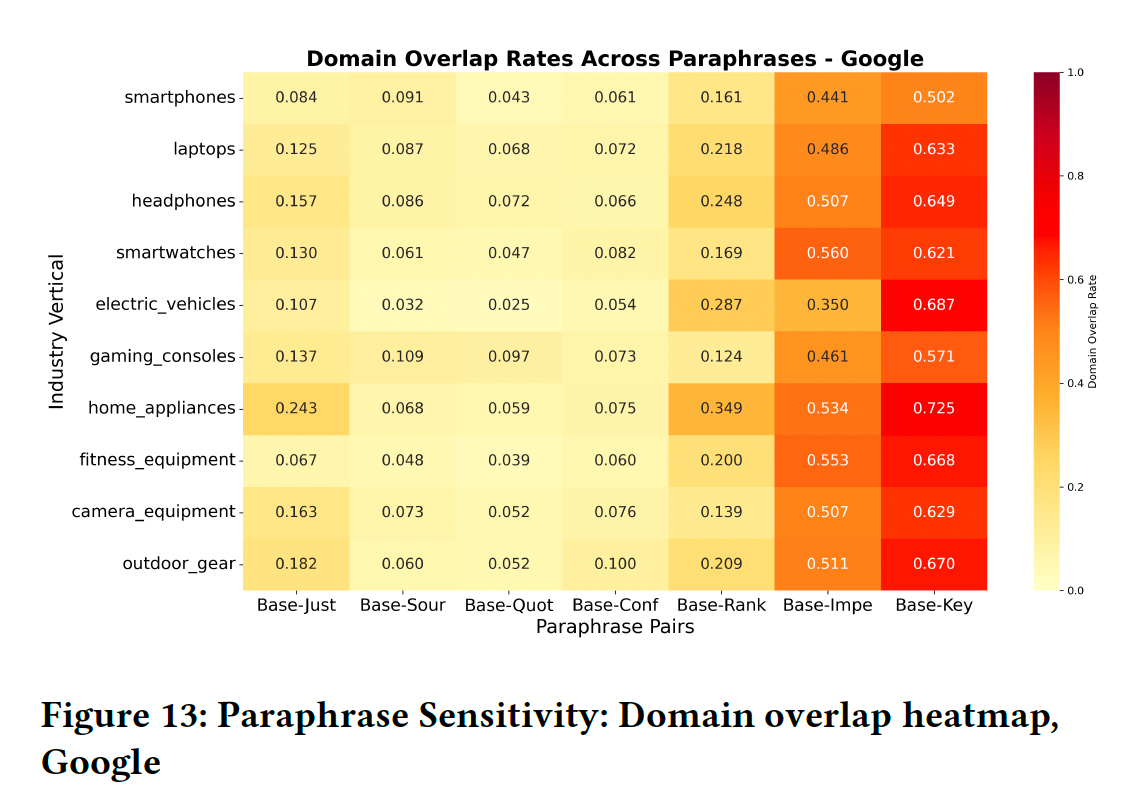

The Toronto team tested something I’ve tried to test (at a smaller scale). They took the same queries and tweaked them slightly. Asked for sources. Required justifications. Changed questions to commands. Seven different ways to ask essentially the same thing.

Google turned out to be surprisingly sensitive to these changes. Rephrase your query even slightly, and Google often returns a completely different set of results. The overlap between results for different phrasings? Often less than 10 percent. The only exception is when you strip queries down to pure keywords or simple commands. Then Google suddenly becomes consistent.

AI engines behave differently. They're remarkably stable across paraphrases, typically maintaining 30 to 70 percent overlap regardless of how you word the question. They seem to understand intent better than Google's keyword matching.

But here's what matters for optimization. Different phrasings don't just change which sites appear. They shift the entire balance of source types. Ask for "sources" and you'll get more earned media. Frame it as a command and brand content might surface more. These subtle shifts compound across thousands of queries your customers make.

The strategic implication is that your content needs to work for multiple phrasings of the same intent. That comparison table on your product page needs to surface whether someone asks "compare X and Y" or "X versus Y analysis" or "which is better X or Y" or simply types "X Y differences."

For Google, you're still optimizing for keyword variations. For AI engines, you're optimizing for conceptual coverage. Both matter, but they require different approaches. The good news is that AI engines' stability means you don't need to chase every possible phrasing. Focus on comprehensive, intent-matching content rather than keyword permutations.
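One way to put a number on this stability for your own queries is the average pairwise overlap between the source sets returned for each phrasing. The Python sketch below assumes Jaccard overlap as the measure and uses placeholder domains; both the function names and the data are illustrative rather than the study's exact procedure.

```python
from itertools import combinations


def jaccard(a, b) -> float:
    """Shared items divided by all distinct items."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 0.0


def paraphrase_stability(results_by_phrasing) -> float:
    """Mean pairwise Jaccard overlap of cited domains across phrasings."""
    pairs = list(combinations(results_by_phrasing.values(), 2))
    if not pairs:
        return 1.0  # a single phrasing is trivially consistent with itself
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Placeholder domains returned for three phrasings of the same intent.
results = {
    "compare X and Y": {"a.com", "b.com", "c.com"},
    "X versus Y analysis": {"a.com", "b.com", "d.com"},
    "which is better X or Y": {"a.com", "c.com", "d.com"},
}

stability = paraphrase_stability(results)
print(stability)  # 0.5: each pair shares 2 of 4 distinct domains
```

A low score on a query you care about is a signal to check which phrasings drop your content, rather than assuming one test query represents them all.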

What this means for your optimization strategy

The Toronto findings demand a fundamental shift in how we approach online visibility. Let me break down what actually works based on this research.

First, embrace the earned media imperative. With AI engines pulling 70 percent of citations from third-party sources, your website alone won't cut it. But this doesn't mean abandoning on-site optimization. Make your content so useful that when AI engines do cite brand sources, you're the obvious choice. Add comparison tables. Create detailed pros and cons lists. Bold your differentiators. Implement comprehensive schema markup. Think of your website as machine-readable evidence that supports what earned media says about you.

Second, understand that each engine lives in its own world. ChatGPT's sources barely overlap with Perplexity's. Claude trusts different domains than Gemini. You're not optimizing for "AI search." You're optimizing for multiple distinct ecosystems. The good news is that this fragmentation means no competitor can dominate everywhere simultaneously. Pick the engines your buyers actually use and focus there.

Third, plan for the timeline reality. If AI engines cite content that's 60 to 120 days old on average, that's your optimization horizon. What you publish today affects Q2 visibility. The earned media push next month shows impact in spring. For a Series A-C startup, this means month one to three for auditing and restructuring existing content, month three to six for earning coverage in publications AI engines trust, and month six to twelve for building sustained media momentum.

Fourth, become a source for sources. The publications AI engines trust need content too. Offer them exclusive data. Provide expert commentary. Create research they'll want to cite. When TechRadar writes about your category, they should quote your insights. This is how you hack the earned media requirement without a massive PR budget.

Fifth, optimize for concepts, not keywords. While Google still gets confused by paraphrase variations, AI engines understand intent. Your comparison content needs to work whether someone asks "X versus Y" or "compare X and Y" or "which is better X or Y." Focus on comprehensive, intent-matching content rather than chasing keyword permutations.

The strategic reality for growth-stage founders is this:

You're not in a daily publishing race. You're in a quarterly authority-building game.

The companies succeeding in AI search didn't start last month. But you don't need years to see improvement. Basic optimizations can shift visibility within 90 days. Strategic earned media placement shows results in a quarter.

This is exactly why systematic GEO optimization matters. You need both brilliant on-site structure for when AI engines do reference brand sources, and strategic earned media placement in the exact publications dominating your category's AI results. One without the other leaves visibility gaps competitors will exploit.

The Toronto research gives us the latest playbook. In fast-moving categories, fresh earned media from this quarter beats year-old TechCrunch coverage. In markets where the giants seem to dominate, remember they only own that tiny universal overlap - the vast unique territories each engine maintains are still winnable. And with AI search users growing from early adopters to 34 percent of US adults, the companies that adapt now will own the next generation of discovery.

Understanding the study's boundaries

The Toronto researchers are transparent about what their analysis can and cannot tell us, and these limitations matter for how we apply the findings.

This snapshot comes from August 2025, in a landscape that evolves monthly. ChatGPT updates its browsing. Perplexity tweaks algorithms. Google expands AI overviews. The specific percentages, and even the core patterns, may change over time, but as your GEO operators, we’ll monitor the space closely and share our interpretation of new findings as soon as they come out!

The three-category classification (Brand/Earned/Social), while logical, has fuzzy edges. Is a sponsored TechCrunch post earned or brand? Different researchers might code it differently. Trust the trends more than the exact percentages.

The team analyzed outputs, not algorithms. They see what happens, not definitively why. They tested consumer categories extensively but B2B software, healthcare, and financial services might show different patterns. Your specific niche could vary.

Perhaps most importantly, they had no access to actual user behavior. We don't know if users click those citations or trust AI recommendations equally across categories. The citation patterns are clear, but their impact on actual buying decisions remains unmeasured.

Despite these boundaries, this remains the most comprehensive analysis of AI search behavior published to date. The patterns are consistent enough and striking enough to guide strategy, even as details continue evolving. Think of it as a map of new territory - not perfect, but infinitely better than navigating blind.

Based on "Generative Engine Optimization: How to Dominate AI Search" by the University of Toronto team (September 2025). Full methodology and findings available in the original paper here: https://arxiv.org/abs/2509.08919

Frequently asked questions

Q: How is GEO different from traditional SEO?

SEO optimizes for Google's ranked list of links. GEO optimizes for AI-synthesized answers that pull from multiple sources. The key difference: Google rewards your website's authority directly, while AI engines reward third-party validation about you. You need both - SEO gets you into the retrieval set, GEO gets you cited in the answer.

Q: Which AI engine should I prioritize?

Start by analyzing your actual user behavior. If you're B2B, ChatGPT likely dominates. Consumer products might see more Perplexity usage. The Toronto study shows each engine pulls from largely different sources, so focusing on one or two engines where your buyers actually are delivers better ROI than spreading thin across all of them.

Q: How long before I see results from GEO efforts?

Based on the freshness data, AI engines cite content that's typically 60-120 days old. Plan for a 3-month lag. Content published in January affects April visibility. Earned media from February peaks in May. This isn't instant gratification - it's quarterly planning.

Q: What's the minimum viable GEO strategy for a Series A startup?

Three essentials: (1) Add comparison tables and structured data to your top 5 landing pages, (2) Identify the 3-5 publications that dominate your category's AI citations and build relationships there, (3) Track weekly whether you appear in AI search results for your top 10 buying-intent queries. This baseline takes 20 hours to set up, then 2-3 hours weekly to maintain.

Q: How technical is GEO implementation?

There are two levels. Basic tactics like schema markup, comparison tables, and FAQ sections can be implemented with standard web development skills - any developer can add these in a week. Advanced optimization like becoming a trusted data source for publishers or systematic earned media placement requires strategic expertise more than technical skills. Start with the basics, layer in advanced tactics as you grow.
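As an example of the "basic" level, here is a short Python sketch that generates schema.org FAQPage markup in JSON-LD. The `faq_jsonld` helper and the sample question are placeholders. Whether a given AI engine actually reads this markup is an assumption; the Toronto study does not measure it.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Placeholder content for illustration.
markup = faq_jsonld([
    (
        "How is GEO different from traditional SEO?",
        "SEO optimizes for ranked links; GEO optimizes for AI-synthesized answers.",
    ),
])

# Embed the printed JSON in the page inside a
# <script type="application/ld+json"> element.
print(json.dumps(markup, indent=2))
```

Generating the markup from the same data that renders the visible FAQ keeps the two from drifting apart.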

Q: Can small brands compete with established players in AI search?

Yes, but not in generic searches. When someone asks for "best CRM," Salesforce will dominate. But the Toronto data shows 50%+ of each engine's sources are unique to that engine. Focus on long-tail queries, specific use cases, and the unique source pools of your target engine. You don't need to beat Salesforce everywhere - just in the specific contexts your buyers search.

Q: Should I stop creating blog content for my website?

No, but redistribute your effort. The 70/30 rule: spend 70% of content effort on earning third-party coverage and 30% on owned content. Your website content should be citation-ready (comparison tables, clear differentiators, structured data) rather than volume-focused. One perfectly structured comparison page beats ten generic blog posts.

Q: How do I measure GEO success?

Traditional metrics like rankings don't apply. Track: (1) Citation rate - how often you appear in AI responses for target queries, (2) Share of voice - your citations vs competitors, (3) Source diversity - which publications cite you, (4) Query coverage - percentage of buying-intent queries where you appear. Run these checks weekly, analyze trends monthly.
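Three of these metrics can be computed from a simple log of AI responses. The Python sketch below assumes each log entry records the query and the set of brands cited in the answer; the `geo_metrics` function, the brand names, and the exact metric definitions are illustrative choices, not a standard. Source diversity is left out because it needs the citing publications, which this log format does not capture.

```python
def geo_metrics(responses, brand, competitors):
    """Compute citation rate, share of voice, and query coverage for one brand.

    responses: list of {"query": str, "cited": set of brand names}
    competitors: set of competitor brand names
    """
    total = len(responses)
    brand_hits = sum(1 for r in responses if brand in r["cited"])
    rival_hits = sum(len(r["cited"] & competitors) for r in responses)
    queries = {r["query"] for r in responses}
    covered = {r["query"] for r in responses if brand in r["cited"]}
    mentions = brand_hits + rival_hits
    return {
        # Share of responses that cite the brand at all.
        "citation_rate": brand_hits / total if total else 0.0,
        # Brand citations relative to brand plus competitor citations.
        "share_of_voice": brand_hits / mentions if mentions else 0.0,
        # Share of distinct queries where the brand appears at least once.
        "query_coverage": len(covered) / len(queries) if queries else 0.0,
    }


# Made-up weekly log; "acme" is a hypothetical brand.
responses = [
    {"query": "best crm for startups", "cited": {"acme", "salesforce"}},
    {"query": "best crm for startups", "cited": {"salesforce"}},
    {"query": "crm with slack integration", "cited": {"acme"}},
    {"query": "cheapest crm", "cited": {"hubspot", "salesforce"}},
]

metrics = geo_metrics(responses, "acme", {"salesforce", "hubspot"})
print(metrics)
```

Because AI answers vary from run to run, logging several responses per query (as in the first two entries) gives a steadier weekly number than a single check.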

Q: What if my content is great but still not getting cited?

You likely have a discovery problem, not a quality problem. Check if you're even in the retrieval set by looking at whether you rank in the top 20 on Google/Bing for those queries. AI engines can't cite what they can't find. Fix traditional SEO first, then optimize for citation.

Q: Is GEO worth it if only 34% of adults use AI search?

That 34% isn't evenly distributed. In tech, it's likely 60%+. Among decision-makers at growth-stage companies, it could be 80%+. Plus, Perplexity handles 780 million queries monthly, and that number is growing. The question isn't whether to optimize for AI search, but whether you can afford to cede this channel to competitors while it's still emerging.

Q: How does language affect my GEO strategy?

Dramatically. ChatGPT completely switches sources by language - almost zero overlap between English and Chinese results. Claude uses English sources regardless of query language. If you're global, you need earned media in each target language for ChatGPT, but strong English coverage works across languages for Claude. Know your market, know your engine.

Q: What's the biggest mistake companies make with GEO?

Treating it like SEO with different keywords. GEO isn't about optimizing your website better. It's about becoming a cited source in the publications AI engines already trust. The biggest mistake is spending all your effort on owned content when AI engines pull 70%+ from earned media.